Assessment: formative assessment, summative assessment, universal screeners, benchmarks, anecdotal observations, state tests, etc., etc., etc. There are a million different types of it, so how are we as educators supposed to take all of these different data points about students and use this massive amount of information to know where to go in our instruction? As a literacy coach, one of the items in my job description is to organize literacy data. After being in this position for three years, and teaching Language Arts for all seven years of my teaching career, I can honestly say that there hasn’t been a year where we have looked at literacy data the same way. This is a subject that I have shied away from blogging much about because it is always changing, and every district looks at literacy data in different ways. In this blog post, I’m going to attempt to articulate my thoughts on how I look at literacy data in an educational atmosphere where data is all over the place.

Benchmark Assessment: Whether your district uses the QRI, DRA, or F&P’s Benchmark Assessment, it is so important to start your year off looking at who your students are as readers. In my district, we use Fountas and Pinnell’s Benchmark Assessment. I treasure the time at the beginning of the year listening to my students read and analyzing what is going well for them as readers and what they will need to work on throughout the school year. This assessment lays my foundation for planning out guided reading, literature circles, and Reading Workshop minilessons. It is also a chance for me to be one-on-one with a student and to deeply understand where they are with their fluency, whether they self-monitor their reading, what types of words trip them up, whether they understand what they read, and where in their comprehension (within, beyond, and about) they are strong and weak. Taking the time to do benchmarks sometimes gets a bad rap.

People say that it takes too much time out of their instruction to complete the benchmarks. There is no doubt that doing benchmarks on each student takes time, but the analogy that I use to think about why it’s important to take the time is going to the grocery store with and without a list. If I go without, I wander the aisles aimlessly, buying things I don’t need only to get home and realize that I bought doubles of what I already had while forgetting other essentials that will probably require me to go BACK to the grocery store. On the other hand, taking the time to make a list before going to the grocery store, checking the fridge and my cupboards, can be time consuming and slightly annoying, but once I get to the grocery store I am focused and efficient, buying what I need while getting in and then out. Doing benchmark assessments with your students versus not doing them at the beginning of the year is the same thing. Doing a benchmark assessment is like writing up your grocery list. You know where to go with students because you know who they are as readers. Not doing a benchmark at the beginning of the year is like not making the list: you may work with students on things they are already strong in as readers while missing vital pieces that they need and/or instructing them at an inappropriate guided reading level altogether. Students don’t magically make growth as readers without expert teachers who take the time to make the grocery list and know where to go without wandering aimlessly.

Benchmarks can be used the wrong way. If a teacher is doing them just to do them and get a level, the teacher is using only a fraction of the magnificent tool that they are. When used right, a teacher is starting the school year by taking a huge first step in knowing their students as readers. This is the foundation of putting the assessment tools together. Check out my “Benchmark Assessment Helpers” to help you gather the data you need to make the best use of this fabulous assessment tool.

Universal Screeners: Once again, there are a ton of them out there: STAR, MAP, etc. My district uses the STAR assessment, and it’s a great way to get some initial data on students and monitor their growth over time. It is a data point that, combined with other data points, can help a teacher paint the picture of students as readers. Universal screeners can be dangerous because they are seemingly easy data points to get and clear-cut data points to understand. In the world of literacy, however, I feel we have to be VERY careful not to use universal screeners alone to drive our instruction, shove students into a reading intervention that isn’t appropriate for them, or use this piece of data as the one and only piece to look at during data meetings. It’s an important piece in the data picture, but it’s just that: one piece. As literacy teachers, coaches, and coordinators, we have to continually remind those who want to treat this as the only data piece that many more pieces are required to complete the puzzle.

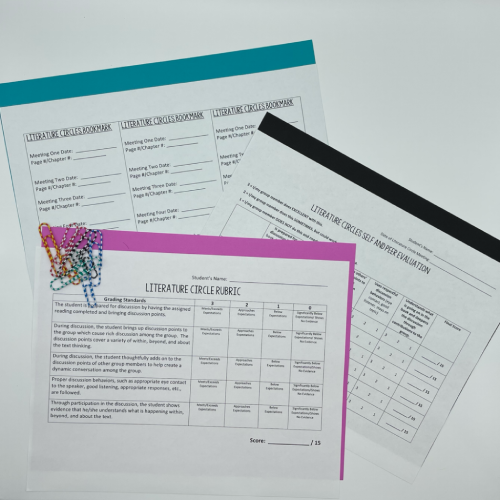

Classroom Assessments: Exit slips, students’ writing about reading, writing pieces, quizzes, student self-assessments, etc. All of the assessments done at the classroom level are SO important. No matter what a test says about who a student is as a reader or writer, classroom assessments are the consistent evidence that teachers need to look at to truly get to know their students as readers and monitor their progress over time. As a classroom teacher, when deciding what to grade and what not to grade, I usually ask myself the question: Will this help me learn more about this student as a reader/writer? If the answer is no, I don’t grade it. If the answer is yes, I do. When grading writing especially, I love to keep a sheet of paper by me with three columns: Individuals, Small Groups, Whole Class. As I’m reading their writing, I’m also jotting down teaching points to check in on with individual students during writing conferences, guided writing topics for small groups of students, and big things that I am seeing a majority of my class do that would make for awesome whole class minilessons in Writing Workshop. Consistent, clear rubrics are also key to comparing students’ data over time and to comparing students’ data with other literacy classes in your grade level. Another piece of classroom assessment that often gets overlooked is having students complete self-assessments periodically to know how they view themselves as readers and writers. When students believe their voices are being heard, it’s amazing what they will reveal in these self-assessments to help teachers better understand them and how to instruct them.

Anecdotal Notes: When I do get the chance to work with students in small groups during guided reading/guided writing and one-on-one during reading and writing conferences, I try to take meaningful anecdotal notes that will help me determine the best next steps for these students as readers and writers. This also includes reading records. Anecdotal notes are essential to quality data meetings on students. They are the hard evidence of what students are able to do inside a classroom as readers and writers on a daily basis.

All the Other Stuff: State tests are a part of our reality in the education world. That’s just how it is. I’m not going to spend much time writing about them in this blog post because I don’t spend much time worrying about them during the school year. I believe that if you’re using the assessments that I mentioned above in a meaningful way to inform your instruction, then your students should be independent thinkers who believe in themselves as readers and writers. Therefore, the test should go okay. End of story. I feel sad for states like New York where this is not their reality, but that is a whole separate blog post.

Here is my bottom line with this blog post: we need it all. As educators, we need to know where each piece of assessment fits into the big picture. We also need to know when a certain type of assessment is overpowering other types, or when some of the most important types of assessment are being overlooked. If data meetings are all about looking at universal screener data and state test data, then I doubt you’re talking much about who the student actually is, what reading/writing behaviors he/she exhibits, next steps for that student, etc. And if you are only looking at those data points and talking about these things, I would argue that you are making important decisions using only a few pieces of the puzzle. TEACHERS are the most important piece of the data conversation. The knowledge you hold in your heads about students as readers and writers based on administering the benchmark, spending time with students in guided reading and guided writing, conferencing with them, and reading their work on formative assessments on a consistent basis is the most important source of data. It won’t be found on a computer system or specialized school report. It’s found in the heads of the people who work with students day in and day out.